We host one of the largest dedicated collections of systematic reviews on the web, with over 550,000 reviews available. With this vast resource comes a responsibility: ensuring that users critically assess the validity of the systematic reviews they access.

Systematic reviews sit at the top of the evidence pyramid/hierarchy, but their inclusion in this category does not automatically guarantee high-quality evidence. While well-conducted systematic reviews deserve their status, many are poorly executed and risk misleading users.

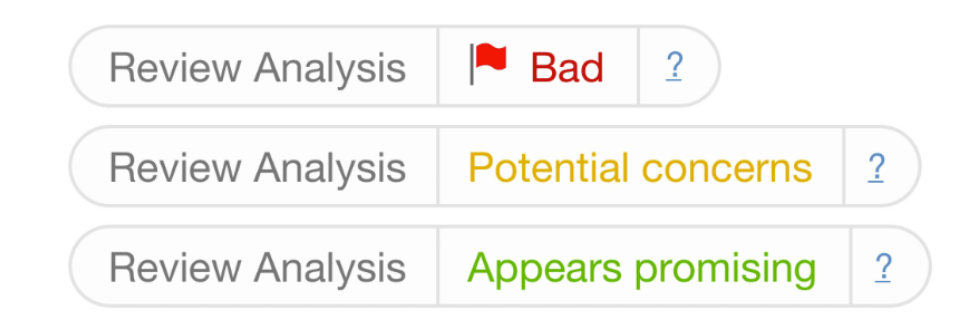

To address this, we have developed a scoring system designed to help users gauge the reliability of systematic reviews. By identifying markers of both rigorous methodology and potential weaknesses, this system will generate a score displayed alongside each review, providing a quick and informed assessment of its credibility.

These scores may be controversial, and we recognize the limitations of any scoring system. To ensure transparency, we provide an explanation of each scoring element and how it impacts the final score. Ultimately, the primary aim of this system is to encourage healthy scepticism among our users.

Elements

Age of review?: The older the review, the more likely it is that new research has been published, meaning the systematic review is out of date. This is not exact, as there might be no new research, but hopefully the sentiment is clear.

- 0-2 years = Good

- 3-5 years = OK

- 6+ years = Cause for concern
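
As a minimal sketch, the age bands above might be applied like this. The function name `age_rating` and the choice to measure age by subtracting calendar years are assumptions for illustration, not a description of how the site actually computes it.

```python
from datetime import date
from typing import Optional


def age_rating(publication_year: int, current_year: Optional[int] = None) -> str:
    """Map a review's age in whole years onto the three bands listed above."""
    if current_year is None:
        current_year = date.today().year
    age = current_year - publication_year  # assumption: age is counted in calendar years
    if age < 0:
        raise ValueError("publication year is in the future")
    if age <= 2:
        return "Good"
    if age <= 5:
        return "OK"
    return "Cause for concern"
```

Passing `current_year` explicitly keeps the function easy to test; leaving it out uses today's date.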

Number of Authors?: Systematic reviews require rigorous methodology, and having a single author raises concerns about bias and robustness. While more isn’t always better, a minimum of three authors is generally seen as best practice.

- 1 author = Red flag

- 2 authors = Cause for concern

- ≥ 3 authors = No concerns
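
The author bands can be sketched in the same spirit. Here `author_rating` is a hypothetical helper that takes an already-parsed list of author names; how the names are extracted from a record is not covered in this post.

```python
from typing import List


def author_rating(authors: List[str]) -> str:
    """Rate a review by its number of named authors, using the bands above."""
    # Ignore empty strings that a sloppy metadata record might contain.
    n_authors = len([name for name in authors if name.strip()])
    if n_authors == 0:
        raise ValueError("no authors supplied")
    if n_authors == 1:
        return "Red flag"
    if n_authors == 2:
        return "Cause for concern"
    return "No concerns"
```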

Databases searched?: A systematic review should search multiple databases to minimize bias and ensure comprehensive coverage of relevant literature. A review relying on just one database is highly problematic, and even two may be insufficient.

- 1 database = Bad

- 2 databases = Cause for concern

- ≥ 3 databases = No concerns
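
One way to count databases is simple text matching against the abstract. The sketch below is illustrative only: the `DATABASE_PATTERNS` table is a hypothetical, incomplete list, and treating MEDLINE and PubMed as a single source is a design choice made here, not something this post specifies.

```python
import re
from typing import Optional

# Hypothetical alias table; the real list of recognised databases is not given here.
DATABASE_PATTERNS = {
    "MEDLINE/PubMed": r"\b(?:medline|pubmed)\b",
    "Embase": r"\bembase\b",
    "CINAHL": r"\bcinahl\b",
    "PsycINFO": r"\bpsycinfo\b",
    "Scopus": r"\bscopus\b",
    "Web of Science": r"\bweb of science\b",
    "Cochrane Library": r"\bcochrane (?:central|library)\b",
}


def databases_mentioned(abstract: str) -> set:
    """Return the canonical names of all databases named in the abstract."""
    return {
        name
        for name, pattern in DATABASE_PATTERNS.items()
        if re.search(pattern, abstract, re.IGNORECASE)
    }


def database_rating(abstract: str) -> Optional[str]:
    """Apply the bands above; return None when no database is detected."""
    count = len(databases_mentioned(abstract))
    if count >= 3:
        return "No concerns"
    if count == 2:
        return "Cause for concern"
    if count == 1:
        return "Bad"
    return None  # the bands above do not define a rating for zero matches
```

Grouping aliases under one canonical name avoids counting "PubMed and MEDLINE" as two independent searches.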

Mention of registration?: Registering systematic reviews (e.g., in PROSPERO) is considered best practice. Reviews published before PROSPERO’s launch in 2011 did not have this option, but they may already be affected by the Age of review criterion.

- Mentions PROSPERO or similar registry = Good

- No mention of registration = Cause for concern
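
Detecting a registration mention could look roughly like the sketch below. The pattern is an assumption: it looks for the word PROSPERO, a PROSPERO-style identifier (`CRD` followed by digits), and two other registries chosen here as examples of "similar" (INPLASY and the Open Science Framework).

```python
import re

# Assumed pattern; the registries counted as "similar" are not listed in this post.
REGISTRATION_PATTERN = re.compile(
    r"\bPROSPERO\b|\bCRD\d{6,}\b|\bINPLASY\b|\bOpen Science Framework\b",
    re.IGNORECASE,
)


def registration_rating(abstract: str) -> str:
    """Return 'Good' if the abstract names a registry, else 'Cause for concern'."""
    if REGISTRATION_PATTERN.search(abstract):
        return "Good"
    return "Cause for concern"
```

The word boundaries stop ordinary words such as "prosperous" from counting as a registry mention.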

Mention of quality tools?: Quality assessment and reporting frameworks such as GRADE, AMSTAR, and PRISMA enhance systematic review rigor. Mentioning them signals a commitment to high methodological standards.

- Mentions any of these tools = Good

- No mention = Cause for concern
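
Tool names need more careful matching than registry names, because "grade" is also an everyday clinical word ("grade 3 adverse events"). The sketch below assumes a case-sensitive match on the upper-case acronyms; the helper names are hypothetical.

```python
import re

# Case-sensitive on purpose: "GRADE" the framework vs "grade" the ordinary word.
# The lookahead rejects longer words such as "GRADED" but allows "AMSTAR2" or "PRISMA-P".
TOOL_PATTERN = re.compile(r"\b(GRADE|AMSTAR|PRISMA)(?![A-Za-z])")


def quality_tools_mentioned(abstract: str) -> list:
    """Return the distinct tool acronyms found in the abstract, sorted."""
    return sorted(set(TOOL_PATTERN.findall(abstract)))


def quality_tool_rating(abstract: str) -> str:
    """Return 'Good' if any tool is named, else 'Cause for concern'."""
    return "Good" if quality_tools_mentioned(abstract) else "Cause for concern"
```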

Retraction Watch check for the article and referenced work?: We check the Retraction Watch database for the review itself and for the articles included in it. Any retraction gets a red flag, regardless of how much the retracted article contributes to the systematic review.

- No retractions = No cause for concern

- Any retractions = Red flag
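
A minimal sketch of the retraction check, assuming the retracted DOIs have already been loaded into a set (how that data is obtained is outside this example). The function names and the example DOIs in the usage note are hypothetical.

```python
from typing import Iterable, List, Optional, Tuple

DOI_PREFIXES = (
    "https://doi.org/",
    "http://doi.org/",
    "https://dx.doi.org/",
    "http://dx.doi.org/",
    "doi:",
)


def normalise_doi(doi: str) -> str:
    """Lower-case a DOI and strip common URL or 'doi:' prefixes."""
    doi = doi.strip().lower()  # DOIs are case-insensitive
    for prefix in DOI_PREFIXES:
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
            break
    return doi.strip()


def retraction_rating(
    review_doi: Optional[str],
    included_dois: Iterable[str],
    retracted_dois: Iterable[str],
) -> Tuple[str, List[str]]:
    """Flag the review if it, or any article it includes, appears as retracted."""
    retracted = {normalise_doi(d) for d in retracted_dois}
    # A missing DOI cannot be checked, so it is skipped rather than flagged.
    candidates = [d for d in [review_doi, *included_dois] if d]
    hits = [d for d in candidates if normalise_doi(d) in retracted]
    rating = "Red flag" if hits else "No cause for concern"
    return rating, hits
```

For example, `retraction_rating("10.1234/review.1", ["https://doi.org/10.1234/ABC.001"], {"10.1234/abc.001"})` flags the review because one included article matches after normalisation.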

Each element is given a score, and these are combined to assign each review one of three overall scores.

There are some obvious issues with the score. Here are a handful; I’m sure others will highlight more:

- Abstract-based assessment – The system evaluates what is explicitly mentioned in the abstract, not the full text.

- Text-matching accuracy – Automated detection of databases, PROSPERO registration, etc., may not be 100% accurate.

- Reliance on DOIs – Missing digital object identifiers (DOIs) can disrupt the scoring process.

- No text – Our system needs text to analyse, so no text = no score. Text might be absent because of redirects or other website anomalies.

- Arbitrary thresholds – The cutoffs for each category are subjective and may evolve over time.

Whitelisted sources

For a number of trusted publishers we default to ‘Appears promising’. These are typically national bodies such as IQWiG, CADTH and NICE, along with Cochrane. We still look for retractions (where the publication has a DOI) and will ‘red flag’ the publication if there is one.
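
The whitelist rule can be read as an override that runs before the normal scoring. The sketch below assumes a hypothetical `WHITELISTED_PUBLISHERS` set containing only the four examples named above; the real list is longer and is not given here.

```python
from typing import Optional

# Illustrative only: just the four publishers named in this post.
WHITELISTED_PUBLISHERS = {"IQWiG", "CADTH", "NICE", "Cochrane"}


def whitelist_override(publisher: str, has_retraction: bool) -> Optional[str]:
    """Return the overriding label for a whitelisted publisher.

    Returns None for any other publisher, meaning the normal
    element-by-element scoring should be used instead.
    """
    if publisher not in WHITELISTED_PUBLISHERS:
        return None
    if has_retraction:
        return "Red flag"  # retractions still take priority over the default
    return "Appears promising"
```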

Summary

So, there you have the scoring system, flaws and all. Despite these imperfections, we are excited to release it. Its primary purpose is to encourage critical thinking, ensuring that users do not accept systematic reviews at face value but instead engage with them sceptically and thoughtfully.
