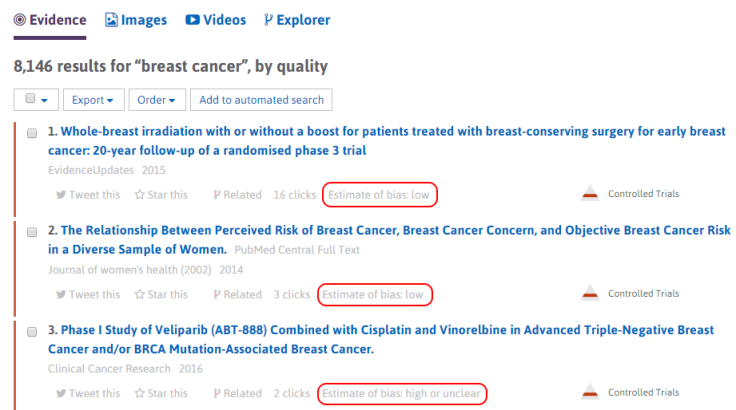

At Trip we like to ‘muck around’ with new techniques to make the site even better. Sometimes there is a clear reason and other times it’s just to explore these techniques to see what they can offer. Currently we’re doing lots of work involving machine learning and recently we released our work on the automated assessment of bias in RCTs. Here are a few other things we’re involved in:

Word2Vec: Completely speculative and I have no idea what the output will be (I believe that it looks for similarities and relationships between words/concepts). We are working on this with Vienna University of Technology (TUW) as part of our Horizon 2020 funded KConnect project. There is loads of hype around this technique so we thought it was too good an opportunity to miss.
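To give a feel for what ‘similarities between words’ can mean in practice, here is a minimal sketch. Word2Vec represents each word as a list of numbers (a vector), and words used in similar contexts tend to end up with similar vectors. The vectors below are made up by hand purely for illustration (they are not trained and not from our KConnect work), and the names `toy_vectors` and `most_similar` are hypothetical.

```python
import math

# Hand-made toy vectors, NOT real Word2Vec output. A trained model would
# learn vectors like these (with many more dimensions) from a text corpus.
toy_vectors = {
    "aspirin": [0.9, 0.1, 0.2],
    "ibuprofen": [0.8, 0.2, 0.1],
    "fracture": [0.1, 0.9, 0.3],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (closer to 1 = more similar)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word, vectors):
    """Rank every other word by its similarity to `word`, best first."""
    scores = [
        (other, cosine_similarity(vectors[word], vec))
        for other, vec in vectors.items()
        if other != word
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for other, score in most_similar("aspirin", toy_vectors):
        print(f"{other}: {score:.2f}")
```

With these toy numbers the two drug names come out as more similar to each other than either is to ‘fracture’, which is the kind of relationship we are hoping a trained model will surface.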

Learning to Rank: Again with TUW, this is a much more understandable technique. It is a machine learning technique used to improve the ordering of search results. It’s one of a number of algorithm tweaks we’re attempting and all will be thoroughly tested using interleaving or A/B testing (probably the former).
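For anyone wondering what interleaving looks like, here is a rough sketch of one common variant, team-draft interleaving. Results from the current ranking (A) and the candidate ranking (B) are mixed into a single list, each result is tagged with the ‘team’ that picked it, and clicks are credited to that team. This is an illustration under my own assumptions, not the code we will actually run; the function names and document ids are hypothetical.

```python
import random


def team_draft_interleave(ranking_a, ranking_b, length, rng=None):
    """Mix two rankings into one list, remembering which team picked each doc."""
    rng = rng or random.Random()
    rankings = {"A": ranking_a, "B": ranking_b}
    picks = {"A": 0, "B": 0}
    interleaved = []
    team_of = {}

    def next_unseen(ranking):
        # Highest-ranked document this team has that is not yet in the list.
        for doc in ranking:
            if doc not in team_of:
                return doc
        return None

    while len(interleaved) < length:
        # The team with fewer picks goes next; ties are settled by a coin flip.
        if picks["A"] < picks["B"]:
            order = ["A", "B"]
        elif picks["B"] < picks["A"]:
            order = ["B", "A"]
        else:
            order = rng.sample(["A", "B"], 2)
        for team in order:
            doc = next_unseen(rankings[team])
            if doc is not None:
                interleaved.append(doc)
                team_of[doc] = team
                picks[team] += 1
                break
        else:
            break  # both rankings are exhausted
    return interleaved, team_of


def credit_clicks(team_of, clicked_docs):
    """Count how many clicked documents were contributed by each team."""
    wins = {"A": 0, "B": 0}
    for doc in clicked_docs:
        if doc in team_of:
            wins[team_of[doc]] += 1
    return wins


if __name__ == "__main__":
    shown, team_of = team_draft_interleave(
        ["d1", "d2", "d3"], ["d3", "d1", "d4"], length=4
    )
    print(shown, credit_clicks(team_of, ["d2"]))
```

The appeal over a plain A/B test is that every user sees results from both rankings at once, so over many searches the team that collects more clicks is the better ranking.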

Document summarisation: Another speculative venture. Yesterday I saw that Google have opened up something called TensorFlow to support document summarisation. This is something I’ve been interested in for a while so I contacted my freelance machine learning contact and we agreed to give it a go (he did most of the work on our 5 minute systematic review system). I’m not sure how document summarisation fits in with Trip but seeing outputs can only help me figure it out.

Hopefully we’ll start seeing results on all these projects before the end of the year.

One important thing to point out (and something I relish) is Trip’s ability to get involved in these projects and get things moving quickly. The document summarisation work was set up within 12 hours of seeing the announcement of TensorFlow being opened up (I’d never even heard of it before). One can only imagine the bureaucratic steps a large organisation would need to go through to even start considering these ground-breaking initiatives.

Trip plays an important role in the health information retrieval ecosystem because we are so innovative. Larger, better-funded members of the ecosystem observe and copy/adopt where we succeed. It’s classic diffusion of innovations. I much prefer being at the front of the adoption curve!
