A long-standing issue with our automated review system (see these examples for acne and migraine) is understanding where it fits in the evidence ‘arena’. In other words, how do we position it so that people understand what it is and how they might benefit from it?

To help us, we’ve asked a number of colleagues about the system and how they might use it. Three bits of feedback, all from doctors, encapsulate the thinking:

Doctor One

A super fast (but neither exhaustive nor systematic) screening tool to search for useful (or not useful) therapies.

For example, if I have a patient with disorder X, I am familiar with one or two therapies, and the patient is not responding but is willing to try alternatives, this seems a much quicker way of finding potentially useful options (and afterwards beginning a more detailed search based on the suggested trials) than reading pages and pages of PubMed results.

Doctor Two

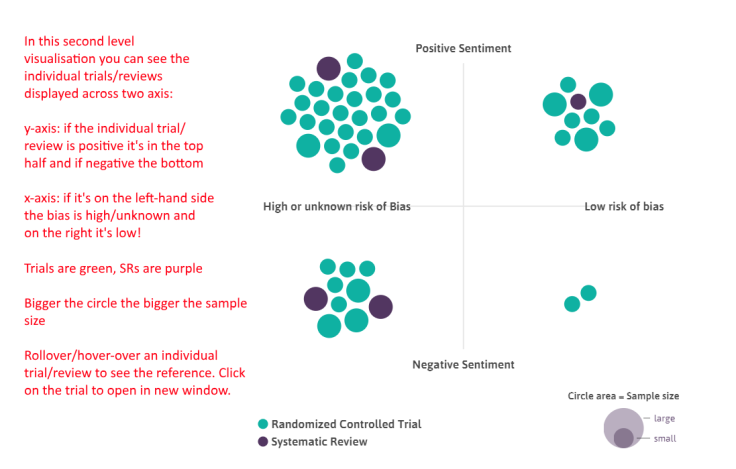

For me, as a GP, I wouldn’t trust the results of this to decide what to do. But that’s probably not the point (and I’d go to a guideline or systematic review anyway). The system is great for exploring evidence and being able to visualise the evidence-space. I think the title ‘auto-synthesis’ probably doesn’t do the tool any favours, since you’ll just get a load of people saying ‘no it’s not…’ (not that being controversial is necessarily a bad thing!). If you do a PubMed search for something, you’ll get hundreds of results, and it’s totally unmanageable. Here you have a system that presents a single visualisation, prioritises RCTs and SRs (so up the pyramid), makes some assessment of quality (to help prioritise), and automatically does the PICO bit. All very cool, very useful and impactful, but maybe the marketing/usage message needs a tweak.

Doctor Three

Personally I’ve found it clinically useful lately in a couple of ways…

1) A good shortcut to see what treatments have been studied for a condition.

2) Related to #1, I suppose, I’ve also found it a quick way to find out whether a PARTICULAR intervention has been studied. For example, for a patient with delirium, I wondered whether melatonin had been studied in hospitalized elderly patients, so after searching on delirium and melatonin (https://www.tripdatabase.com/autosynthesis?criteria=delirium&lang=en), I was able to search further by expanding the Melatonin bubble. I find it particularly useful to be able to expand the bubbles and then link directly to the PubMed article entries.

So, all three say roughly the same thing: it’s an evidence exploration tool. Search for ‘acne’ on Trip, Medline, Google, etc. and you get a list of search results but no sense of the evidence base in that area.

So, to us, it seems like an evidence exploration tool, but is it actually an evidence map? We did play with the idea of Trip Overviews of Evidence (TOE), but we’re not sure! We’ve also had various other suggestions, so please help us pick:

One other suggestion, which we really like, although the acronym is less appealing: Automated Review and Synthesis of Evidence.

If you have anything else to add, either email me directly (jon.brassey@tripdatabase.com) or leave a comment below.
