At AskTrip, we often see clusters of very similar or overlapping clinical questions submitted by users. When this happens, we like to review the answers side by side to check for consistency and to understand how our system handles closely related prompts. Recently, we noticed several questions about whether vitamin C should be taken with oral iron, so we ran them together to compare the outputs. While the core clinical message is the same across the answers, the emphasis and level of detail vary in interesting ways.

Q1. How much vitamin C is required to enhance oral iron absorption?

Clinical Bottom Line:

Vitamin C can enhance iron absorption, with suggested doses ranging from 100 mg to 500 mg. However, evidence on its additional benefit with iron supplements varies, indicating further individual assessment may be needed for supplementation decisions.

Q2. What are the recommended best practices for oral iron supplementation in patients with anemia, considering the addition of vitamin C?

Clinical Bottom Line:

While vitamin C is often suggested to enhance the absorption of oral iron, current evidence does not conclusively support its clinical benefit in treating iron deficiency anemia. More research is needed to establish definitive guidelines.

Q3. Does vitamin C help with iron absorption?

Clinical Bottom Line:

Vitamin C enhances the absorption of non-heme iron, but its impact on clinical outcomes in anemia treatment alongside iron supplements is inconclusive based on current evidence.

Q4. What is the effect of ascorbic acid on iron absorption in patients with iron deficiency anemia?

Clinical Bottom Line:

The evidence suggests that ascorbic acid does not significantly enhance the effectiveness of iron supplements in improving hemoglobin or ferritin levels in patients with iron deficiency anemia. Further research is needed to clarify its role.

So, Do These Answers Say the Same Thing?

In practical terms, yes. All four answers point toward the same actionable advice for clinicians:

- Vitamin C does enhance iron absorption biochemically.

- But clinical trials have not shown meaningful improvements in hemoglobin or ferritin when vitamin C is added to oral iron therapy.

- Therefore, adding vitamin C is optional, not essential.

- It may still be useful in people with low dietary vitamin C intake or when iron is taken with food, but it is not required for most patients.

Where the answers differ is in emphasis. Some focus more on the theoretical mechanism, others on dosage, and others on clinical outcomes.

Why Do the Answers Differ?

Although all four answers communicate the same core clinical message, the differences in tone, emphasis, and detail reflect the non-deterministic nature of large language models (LLMs). These models don’t retrieve fixed responses; instead, they generate answers dynamically by predicting plausible language patterns based on their training data and the prompt. As a result, even when the underlying evidence is the same, each answer may frame the issue slightly differently, highlighting certain studies, focusing more on mechanisms or clinical outcomes, or varying in how strongly the conclusions are stated. This variability is normal for LLMs and explains why answers can align on substance while differing in style or emphasis.
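To make the idea of non-determinism concrete, here is a minimal sketch of sampling, the general mechanism behind run-to-run variation in generated text. It is a toy illustration, not AskTrip's system: the three "framing" options and their scores are hypothetical, and real models sample one token at a time over a very large vocabulary.

```python
import math
import random


def sample_next(scores, temperature, rng):
    """Pick one option from raw scores using a temperature-scaled softmax."""
    scaled = [s / temperature for s in scores.values()]
    peak = max(scaled)
    # Subtract the maximum before exponentiating for numerical stability.
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(list(scores.keys()), weights=weights, k=1)[0]


# Hypothetical scores for how an answer might frame the same evidence.
framing_scores = {"mechanism": 2.0, "dosage": 1.5, "clinical outcomes": 2.2}

rng = random.Random()  # unseeded, so repeated runs can differ
for run in range(1, 5):
    print(run, sample_next(framing_scores, temperature=1.0, rng=rng))
```

With a temperature near zero the highest-scoring option is chosen almost every time; at higher temperatures the lower-scoring options appear more often, which is roughly why the same question can come back with a different emphasis.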

Final Thoughts

This small experiment highlights both the strengths and quirks of AI-generated clinical answers. Even when content is broadly aligned, the framing can shift subtly, which matters if you’re a clinician looking for crisp guidance. The good news is that, in this case, the core message across all four answers is consistent and clear: iron works on its own, and vitamin C is optional.

UPDATE

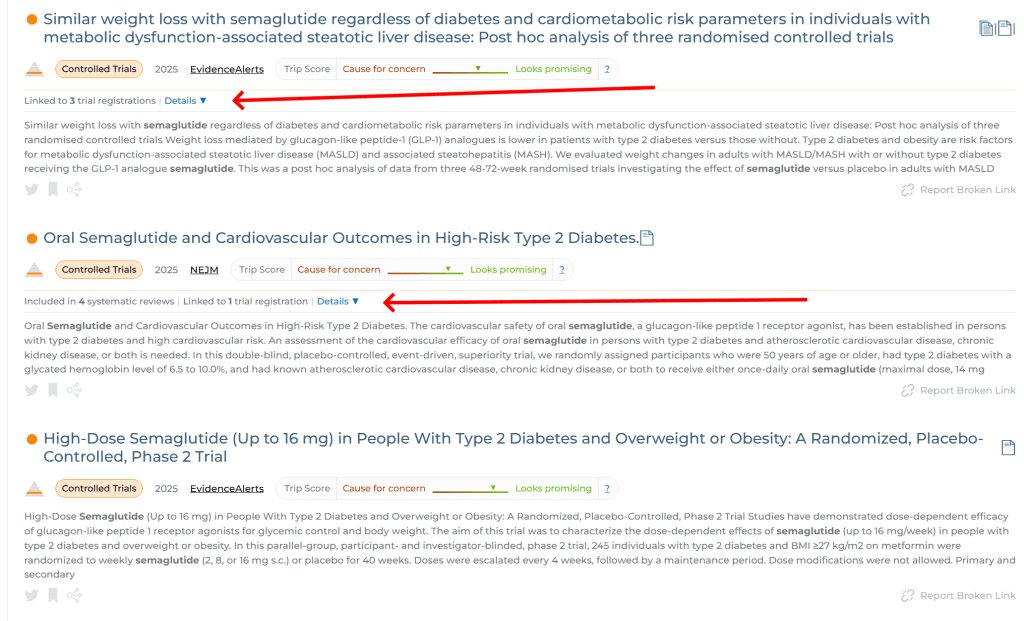

Not long after posting, a user contacted us to ask about reference overlap. An excellent question! I asked ChatGPT to analyse the overlap (it feels less biased than me doing it): “Across the four Qs, there is a strong shared core of evidence—mainly one major RCT and one or two meta-analyses—plus a recurring guideline. Around this core are unique additions tailored to each question’s angle (mechanistic vs. clinical).”

I’m fairly reassured by this extra analysis!
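For anyone who would rather check reference overlap by hand than ask a chatbot, here is a minimal sketch of one way to do it. This is not how the analysis above was produced, and the reference labels are hypothetical placeholders rather than the actual reference lists from the four answers.

```python
from itertools import combinations

# Hypothetical placeholder labels, NOT the real references cited in Q1-Q4.
references = {
    "Q1": {"rct_a", "meta_analysis_a", "guideline_a", "dose_study_a"},
    "Q2": {"rct_a", "meta_analysis_a", "guideline_a", "guideline_b"},
    "Q3": {"rct_a", "guideline_a", "mechanism_review_a"},
    "Q4": {"rct_a", "meta_analysis_a", "meta_analysis_b", "guideline_a"},
}


def jaccard(a, b):
    """Share of references two answers have in common (0 = none, 1 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# References cited by every answer form the shared core.
shared_core = set.intersection(*references.values())
print("Cited by every answer:", sorted(shared_core))

# Pairwise overlap between each pair of answers.
for (q_a, refs_a), (q_b, refs_b) in combinations(references.items(), 2):
    print(f"{q_a} vs {q_b}: {jaccard(refs_a, refs_b):.2f}")
```

Swapping in the real reference lists would show which sources form the shared core and which are unique to a single answer.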
