Yesterday was a momentous day for AskTrip. We recorded the highest number of clinical questions ever asked in a single day – 113 in total.

That’s 113 moments where a health professional turned to AskTrip for support: to check a management decision, clarify a diagnosis, weigh risks and benefits, or simply explore the evidence behind a difficult case. To mark the occasion, we took a closer look at what those questions were about – and what they reveal about the daily reality of medicine.

The Constant Search for Better Treatment

It’s no surprise that most questions revolved around treatment and therapeutics. Clinicians want to know: What’s the best, safest option for my patient?

- Should methotrexate be taken at a particular time of day?

- Is aspirin a valid long-term option after anticoagulation for pulmonary embolism?

- What are the benefits and risks of SGLT2 inhibitors in elderly patients with diabetes and heart failure?

These queries show not just an appetite for the latest trials and guidelines, but also a desire to tailor care to unique patient circumstances — like whether stenting is safe in someone with a nickel allergy or why bile acids might be elevated after a cholecystectomy when bilirubin is normal.

Surgery: When to Cut, and How to Do It Better

Surgery questions revealed two strands of curiosity: when to intervene and how to do it better.

- Should a neonatal hernia be repaired early, and if so, when?

- Should proximal humerus fractures be managed surgically or non-surgically?

- How does robotic prostate surgery compare to conventional approaches in cost and outcomes?

- Is transoral thyroidectomy a safer, less invasive option than open surgery?

These questions highlight a thoughtful balancing of risks and benefits, as well as a hunger for innovations that promise quicker recovery and fewer complications.

The Rise of “Prehab” and Non-Drug Strategies

One of the strongest clusters was around prehabilitation — preparing patients physically and mentally before major interventions like surgery or CAR-T therapy.

- What is the evidence for prehabilitation in thoracic surgery?

- How does it affect recovery after esophagectomy or cancer treatment?

This shows a shift from reactive medicine to proactive strengthening, where the goal is not just survival but resilience and long-term outcomes.

Other questions highlighted rehabilitation and lifestyle: the role of exercise in chronic fatigue syndrome, the best exercises for thumb extension, and safe activity for patients with PICC lines. These aren’t about treating disease alone, but about restoring function and quality of life.

Complications and Safety First

Again and again, clinicians asked not just “Does it work?” but “What could go wrong?”

- Can proton pump inhibitors cause myalgia?

- Is intracameral cefuroxime safe in penicillin-allergic patients?

- What complications occur after augmentation mastopexy or breast reduction?

This emphasis on adverse effects shows how safety considerations shape clinical decisions as much as effectiveness.

Beyond the Bedside

Not all questions were about patient management. Some reached into the systems that underpin healthcare:

- How does plagiarism in nursing programs impact education quality?

- What are the benefits of grounded theory research in healthcare?

- Does using a template reduce variation in nursing records?

These reflect a broader concern with the integrity of training, the quality of evidence, and the consistency of documentation.

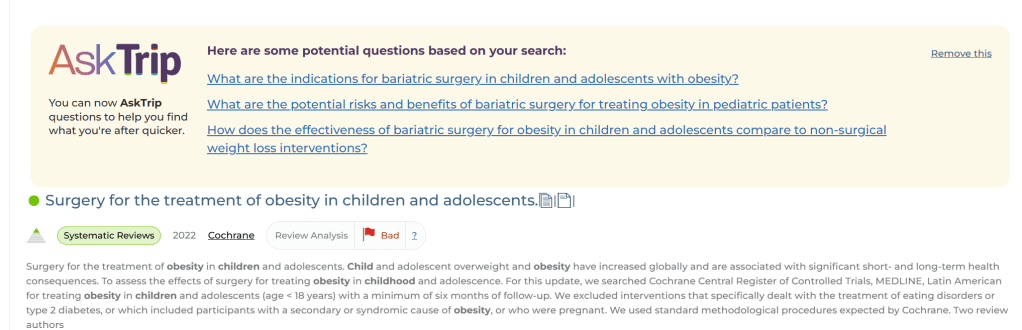

Children and Adolescents in Focus

Children and young people also featured prominently:

- Do sleep disorders contribute to anxiety and depression?

- What’s the evidence for scoliosis screening in Europe?

- Are team sports or meditation beneficial for children?

- How should screen time be limited?

These queries show clinicians thinking beyond immediate symptoms, grappling with prevention, wellbeing, and the challenges of modern childhood.

Non-Pharmacological Interventions

About one in six questions were not about drugs at all, but about lifestyle, rehabilitation, or supportive care.

- What lifestyle changes can slow cognitive decline?

- How should screen time be managed in children?

- What is the role of exercise in chronic fatigue syndrome?

- What are the most effective prehabilitation interventions before surgery?

This cluster shows a strong appetite for evidence beyond prescribing — emphasising prevention, recovery, and wellbeing.

What Stands Out from 113 Questions

Looking across the day’s record activity, three things stand out:

- Breadth of curiosity – From thumb exercises to the global burden of dementia, clinicians are asking at every scale.

- Safety vs efficacy – Many questions probed not “does it work?” but “is it safe?”

- System-level thinking – Alongside bedside care, clinicians are asking about education, documentation, and societal health.

Why This Matters

Guidelines and textbooks provide frameworks, but frontline clinicians constantly face edge cases, overlaps, and grey zones. The 113 questions asked yesterday show where evidence support is most needed — in diabetes, dementia, oncology, paediatrics, and in the systems that support safe care.

Closing Thought

Clinical questions aren’t abstract. They emerge from real patients, puzzling scans, unexpected complications, and the human urge to do better. Yesterday’s record-breaking 113 questions are more than just a number — they’re a window into the everyday challenges of healthcare, and a reminder that curiosity is alive and well in medicine.

At AskTrip, we’re proud to help clinicians find answers to those questions — big and small — that matter most to their patients.
